angelburst29

The Living Force

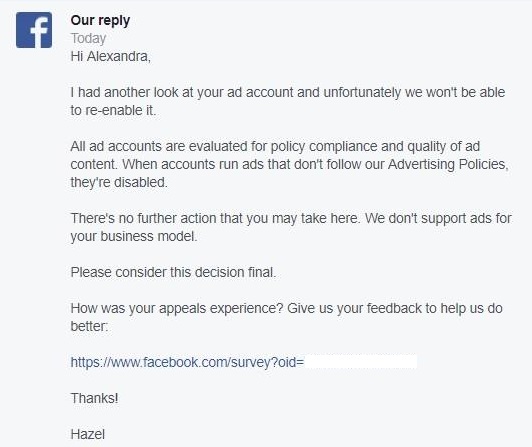

April 12, 2018 - Coincidence? On February 4, 2004, The Pentagon killed a project to amass personal browsing and viewing habits of American citizens. On the same date, Facebook launched. (Pictured graph)

Coincidence? On February 4, 2004, The Pentagon killed a project to amass personal browsing and viewing habits of American citizens. On the same date, Facebook launched. – Investment Watch Blog

Back-dated 02.04.04 - Pentagon kills LifeLog Project

Pentagon Kills LifeLog Project

The Pentagon canceled its so-called LifeLog project, an ambitious effort to build a database tracking a person's entire existence.

Run by Darpa, the Defense Department's research arm, LifeLog aimed to gather in a single place just about everything an individual says, sees or does: the phone calls made, the TV shows watched, the magazines read, the plane tickets bought, the e-mail sent and received. Out of this seemingly endless ocean of information, computer scientists would plot distinctive routes in the data, mapping relationships, memories, events and experiences.

LifeLog's backers said the all-encompassing diary could have turned into a near-perfect digital memory, giving its users computerized assistants with an almost flawless recall of what they had done in the past. But civil libertarians immediately pounced on the project when it debuted last spring, arguing that LifeLog could become the ultimate tool for profiling potential enemies of the state.

Researchers close to the project say they're not sure why it was dropped late last month. Darpa hasn't provided an explanation for LifeLog's quiet cancellation. "A change in priorities" is the only rationale agency spokeswoman Jan Walker gave to Wired News.

However, related Darpa efforts concerning software secretaries and mechanical brains are still moving ahead as planned.

LifeLog is the latest in a series of controversial programs that have been canceled by Darpa in recent months. The Terrorism Information Awareness, or TIA, data-mining initiative was eliminated by Congress – although many analysts believe its research continues on the classified side of the Pentagon's ledger. The Policy Analysis Market (or FutureMap), which provided a stock market of sorts for people to bet on terror strikes, was almost immediately withdrawn after its details came to light in July.

"I've always thought (LifeLog) would be the third program (after TIA and FutureMap) that could raise eyebrows if they didn't make it clear how privacy concerns would be met," said Peter Harsha, director of government affairs for the [URL='http://cra.org/']Computing Research Association[/URL].

"Darpa's pretty gun-shy now," added Lee Tien, with the Electronic Frontier Foundation, which has been critical of many agency efforts. "After TIA, they discovered they weren't ready to deal with the firestorm of criticism."

That's too bad, artificial-intelligence researchers say. LifeLog would have addressed one of the key issues in developing computers that can think: how to take the unstructured mess of life and recall it as discrete episodes – a trip to Washington, a sushi dinner, construction of a house.

"Obviously we're quite disappointed," said Howard Shrobe, who led a team from the Massachusetts Institute of Technology Artificial Intelligence Laboratory that spent weeks preparing a bid for a LifeLog contract. "We were very interested in the research focus of the program ... how to help a person capture and organize his or her experience. This is a theme with great importance to both AI and cognitive science."

To Tien, the project's cancellation means "it's just not tenable for Darpa to say anymore, 'We're just doing the technology, we have no responsibility for how it's used.'"

Private-sector research in this area is proceeding. At Microsoft, for example, minicomputer pioneer Gordon Bell's program, MyLifeBits, continues to develop ways to sort and store memories.

David Karger, Shrobe's colleague at MIT, thinks such efforts will still go on at Darpa, too.

"I am sure that such research will continue to be funded under some other title," wrote Karger in an e-mail. "I can't imagine Darpa 'dropping out' of such a key research area."

Pentagon Wants to Make a New PAL

Pentagon Alters LifeLog Project

A Spy Machine of DARPA's Dreams

Hide Out Under a Security Blanket
