AI will lead to another technological revolution.

I'm afraid it will be the last one

The various models of artificial intelligence have become one of the main topics of recent months and will certainly be on many people's lips in the near future. As a machine learning specialist, I would like to tell you where we stand.

In recent months, artificial intelligence has become a buzzword on a whole new level. With each passing day, the online community is getting more and more wound up, swinging between unhealthy hype and extreme defeatism. Those who want to distance themselves from this fever, meanwhile, adopt a position of charming ignorance. Under such circumstances, it is worth trying to answer the question: what is actually going on?

As a machine learning specialist, I hasten to answer - well, I don't have the faintest idea. Of course, we can point to the beginning of this widespread fever, and that is the success of so-called "generative models," first image generators and then the now mainstream ChatGPT. It's understandable that the new toys have stirred the imagination of the crowds, especially since they so spectacularly demonstrate the possibilities of a long-awaited and much-mythologized technology. The thing is, however, that a number of prominent industry professionals are also strongly moved, and at least some of them can hardly be suspected of trying to play up the matter merely in terms of marketing.

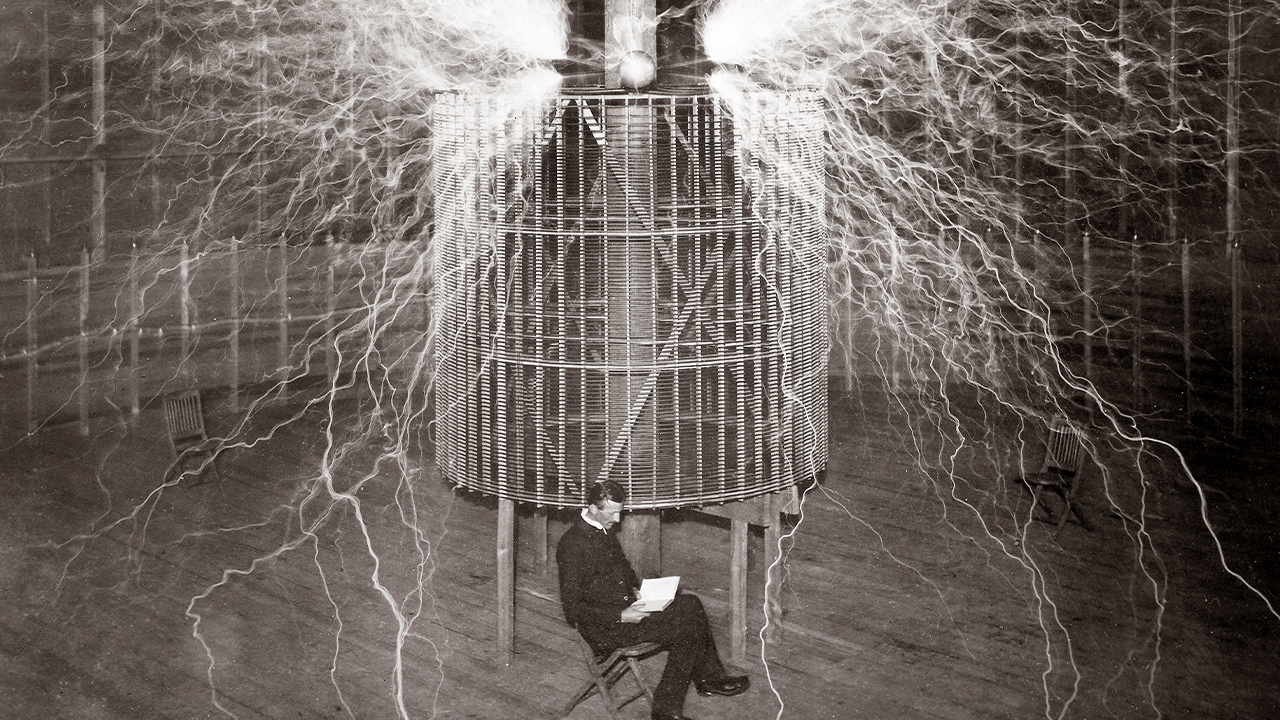

Alchemy

The notion that AI researchers don't really know what they're doing, and that machine learning is a kind of 21st century alchemy, is beginning to resonate more and more strongly in popular opinion. So it's not surprising that a frightened journalist asks Google's chief executive at a press conference why they are releasing a technology they don't understand. But the truth is much more complicated, and to grasp the gravity of the situation, it is necessary to look at the matter in a more technical way.

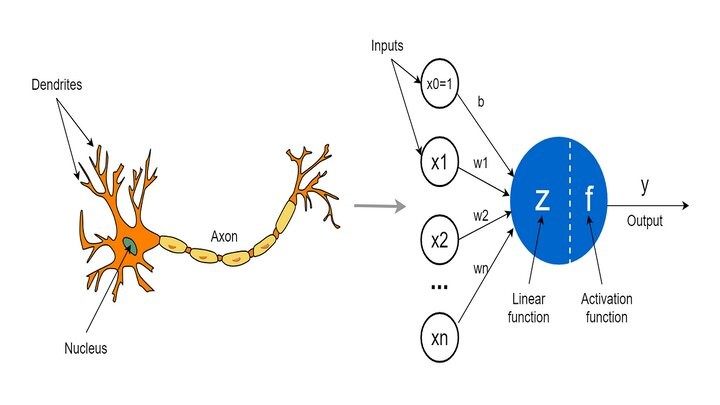

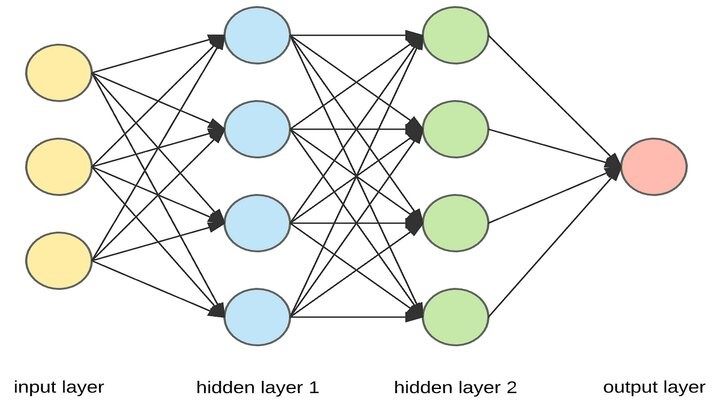

Let's start with the fact that artificial intelligence is an interdisciplinary science with a fairly solid foundation in branches of mathematics such as linear algebra and probability theory. We know well how neural networks, and therefore the algorithms behind the current AI revolution, are built. Initially, by the way, progress in this field came mainly from new architectures or new components of such algorithms. The last really significant discovery was the Transformer architecture, published in 2017 and built around the so-called "attention mechanism," which gave neural networks the ability to process really large chunks of text.

It is this mechanism that is a key component of models such as ChatGPT.
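To make the idea less abstract, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration under simplifying assumptions, not how any production model is implemented: real models apply learned projection matrices to produce the queries, keys and values, while this toy version reuses the raw token vectors directly. The names `softmax`, `attention` and `tokens` are made up for this example.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Three toy "token" vectors (assumed data). In self-attention the same
# vectors act as queries, keys and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = attention(tokens, tokens, tokens)
```

Each output vector is a blend of all the input vectors, weighted by how similar they are to the token being processed. This is what lets every position in a text "look at" every other position.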

Since then, however, there has been a bit of a paradigm shift. This is because it turned out that we could significantly increase the capabilities of AI, simply by increasing its size and the amount of data with which it is trained. This coincided with a significant increase in available computing power through the use of graphics cards, and led to a race to see who could build a bigger model (the professional name for AI). Of course, this was necessarily a race in which the winner is the one who is able to invest more.

So what is at stake when industry experts claim that AI algorithms are actually black boxes? The problem lies in the scale and complexity of the operations being performed. Imagine a frame made up of a single pixel. Let's assume that as we move to the next frame, the pixel will change color. The phenomenon seems trivial and easy to describe: a small square changing color over time. Now imagine a 4K resolution image. 3840 by 2160 pixels, some of which will change color when you move to the next frame. This is the same phenomenon as in the case of a single pixel, but presented at such a scale it creates a movie - a completely new and much more complex load of information.

These are the kinds of large-scale phenomena that we cannot see and interpret in neural networks. At the same time, we know that they must occur there, as our observations and the capabilities of modern models clearly indicate.

At the same time, these complex phenomena must arise spontaneously, as we are certainly unable to design them.

Interestingly, this is strikingly reminiscent of one of the theories about the human mind, which states that it arises as just such an emergent (spontaneous) phenomenon, the result of many simple operations performed by the brain. If this sounds scary, I suggest you fasten your seatbelts, as the party is just getting started.

The basic elements of artificial neural networks are modeled on biological neurons.

Golem

The problem of interpretability has been present in the field of AI almost forever. At the same time, it is worth emphasizing that we have largely created it for ourselves. One need only look at the ratio of the investment that has gone into AI interpretability research over the past 10 years to the investment in increasing its performance. I still remember the days when the industry said that the ability to interpret computation would be crucial, because business would never base decision-making processes on black boxes whose operation it did not understand. Well, today black boxes generate nude photos of non-existent models, and business sells them.

However, the problem with interpretability seems serious, because it makes it genuinely difficult to answer the question of what is actually happening.

There is an assumption that in the case of Large Language Models (LLMs), such as ChatGPT, we may see the emergence of skills that were not taught. This would be a major breakthrough in research, and at the same time would allow us to see where specifically we are on the road to creating superintelligence. Unfortunately, we are unable to capture the thoughts of a machine. Moreover, the scale at which these algorithms process information is so large that we can't even say with certainty what exactly was in the data intended to train them. This generates a mass of technical problems, but what turns out to be significant is that under such conditions it is difficult to tell whether the algorithm actually developed a skill on its own, or whether it found hints in the depths of the training set.

Virtual Westworld

As it turns out, models such as ChatGPT can also find interesting applications in the field of computer games. The authors of the publication Generative Agents: Interactive Simulacra of Human Behavior prepared a simulation in which a group of NPC characters was controlled by ChatGPT. As a result, it was possible to create a small virtual village whose inhabitants behaved in an interesting and realistic way. The characters interacted with each other, cooperated and coordinated their time. For example, one of the residents came up with the idea of preparing a party to celebrate the upcoming Valentine's Day. He conveyed the idea in conversation to other NPCs, and after some time the party actually took place. Currently, large language models are not trained or adapted for such applications, but this experiment showed that it is entirely possible. So it can be assumed that the gaming industry will also benefit from the AI revolution in the near future.

Nevertheless, all AI professionals see that the time of domain-specific models is coming to an end, and the time of general-purpose systems is coming. Until recently, when designing an AI system for commercial applications, one designed a strictly specialized tool. There were separate families of algorithms focused on processing a specific type of data, such as text or images.

Today, intensive research is underway on multi-modal models, that is, models capable of processing multiple types of data simultaneously. Tristan Harris and Aza Raskin, founders of the Center for Humane Technology, have named this new class of algorithms GLLMM (Generative Large Language Multi-Modal Model), referring to golems from Hebrew folklore.

GPT-4 is supposed to be such a system, although at the time of writing its functionality in this regard has not yet been made public. However, all indications are that large language models will reach this state in the near future.

In this way, we will take the first step toward so-called General Artificial Intelligence. This term does not necessarily mean a super-intelligent artificial god that will wipe us off the face of the planet.

In its technical sense, the term simply means a general-purpose intelligent system. Consciousness and the desire to destroy humanity are not at all necessary in this case.

It is worth noting that progress in this field is occurring so rapidly that by the time you read this text, the current state will certainly be outdated. This means that the capabilities of intelligent systems are growing at an alarming rate.

The aforementioned GPT-4 has recently been extended with a so-called "Reflexion" mechanism, which allows it to analyze and modify its own behavior in order to improve its performance. The same model (GPT-4) has become the basis of the Auto-GPT system, which is able to take autonomous actions on the network to achieve its goals. All this makes the title of Microsoft's recent publication Sparks of Artificial General Intelligence start to look like more than just a cheap marketing ploy.

Artificial neural networks are algorithms whose structure is modeled on that of the biological brain, but they are not its faithful model.

Moloch

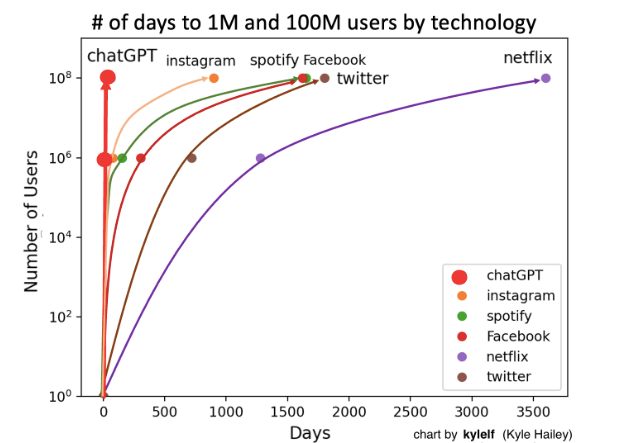

The pace of technological progress is only one of the warning signs here. Our reaction to the capabilities of the next generation of AI may be just as much of a concern. ChatGPT was the biggest AI product launch in history. Overnight it became apparent that the algorithms we've all been playing with in labs for several years could make gigantic amounts of money. In fact, no one expected that people would crave this technology so much, especially given its known limitations. However, it has to be said that OpenAI has played its game masterfully, commercializing large language models and setting off a whole avalanche of events.

Indeed, the explosion of this supernova led to a colossal feedback loop. Like mushrooms after the rain, projects focused on the development and integration of large-scale language models began to spring up. The huge human interest translated into new data in the form of conversations with AI. This, in turn, allows the construction of new versions of models such as GPT-4, which again became the basis of projects such as the Auto-GPT mentioned above.

The fountain of dollars that shot up after the release of ChatGPT also automatically changed Big Tech's policies.

The big players immediately embarked on a murderous arms race, falling into a mechanism known from game theory as the "Moloch Trap." In this trap, the participants of a game push one another to pursue a certain goal (the so-called "moloch function"). Each player, taken individually, acts rationally, but together they end up racing toward that goal. Initially, the race benefits each of them, but over time it begins to make their situation worse. However, none of the players is able to withdraw from the race anymore, even if they are aware of the trap, because such a move means their individual defeat. Thus, everyone is heading for defeat in solidarity.
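The logic of the trap can be sketched with a toy two-player game. This is only an illustration: the payoff numbers are invented, and modeling the situation as a prisoner's-dilemma-style game between two labs is my simplifying assumption, not a description of any real market.

```python
# Hypothetical payoffs for two labs: (payoff to player A, payoff to player B).
PAYOFFS = {
    ("hold", "hold"): (3, 3),  # both pause: a safe, shared benefit
    ("hold", "race"): (0, 5),  # whoever pauses alone loses the market
    ("race", "hold"): (5, 0),
    ("race", "race"): (1, 1),  # everyone races: worse for both than pausing
}
ACTIONS = ("hold", "race")

def best_response(opponent_action):
    """Action that maximizes a player's own payoff against a fixed opponent."""
    return max(ACTIONS, key=lambda a: PAYOFFS[(a, opponent_action)][0])

def is_equilibrium(a, b):
    """True if neither player gains by switching unilaterally.

    The payoff table is symmetric, so the same best_response function
    can be used for both players.
    """
    return best_response(b) == a and best_response(a) == b
```

With these numbers, racing is the best individual reply whatever the other side does, so ("race", "race") is the only stable outcome, even though both players would be better off at ("hold", "hold").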

Max Tegmark, founder of the Future of Life Institute and author of a high-profile letter calling for a halt to the development of models larger than GPT-4, compares the situation to the story from the movie Don't Look Up. Humanity realizes that the uncontrolled development of this technology has consequences that are difficult to predict, the scale of which could be truly enormous. Nevertheless, we continue to rush toward the abyss, bickering and trying to make money from it all. Tegmark says that six months is enough to work out the basic safety mechanisms, but the big players are unable to stop because they have fallen into the snare of the moloch. Indeed, the vast majority of the IT industry and AI specialists today are screaming about the need to at least debate the regulation of the technology.

Unfortunately, politicians still seem to treat the whole issue as nerd hysteria, or at best as a chance to improve their own ratings. This is all the more bizarre because this is not the first time we have been in this situation.

Growth rate of ChatGPT users (source: johnnosta.medium.com).

The Second Contact

A recently popular thesis is that the emergence of generative models, or even General Artificial Intelligence, is not our first contact with an advanced AI system. The authors of this theory use the analogy of hypothetical contact with an alien civilization and point out that in the case of humanity and AI, it actually took place at the advent of social media. Technically, there is quite a bit of truth in this, since under the hood of applications such as Facebook and Twitter there are powerful recommendation systems based on AI algorithms. So it's safe to assume that the emergence of social media was the first-ever instance of humans interacting with artificial intelligence on a mass scale. These systems are, of course, relatively primitive compared to what we imagine as General Artificial Intelligence, but our interactions with them have produced a number of completely unexpected consequences.

The goal of most modern AI algorithms is to optimize a certain function, which we call the "cost function." In simple terms, it's simply a matter of optimizing actions so as to achieve the intended goal as much as possible. In the case of social media recommendation systems, this goal is to increase user engagement. Let's remember that these algorithms do not have any higher cognitive functions, not to mention awareness. At the same time, they competed with each other for our attention in conditions resembling natural selection.

So each of these systems sought, blindly so to speak, the best ways to keep us in front of our phone and computer screens. As it turned out, the best solution is addiction, and interestingly enough, this can be achieved in social media by turning up the aggression and negative sensations. In other words, just give people places where they can argue with impunity with their online opponents, and they will begin to do so compulsively. As a result, nearly two decades after Facebook's launch, such communities are literally disintegrating before our eyes. You don't need elaborate conspiracy theories or even bad intentions to do this. All it takes is optimization theory and a simple "app" for communication.
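The "blind" optimization of a cost function described above can be sketched in a few lines. This is a toy example with an invented one-dimensional cost, not a recommendation system: the function `cost`, its minimum at 3.0, and the step size are all assumptions chosen for illustration.

```python
def cost(x):
    """Hypothetical cost: how far the current setting x is from the ideal 3.0."""
    return (x - 3.0) ** 2

def gradient(x):
    """Derivative of the cost; it points in the direction of increasing cost."""
    return 2.0 * (x - 3.0)

def minimize(start, learning_rate=0.1, steps=100):
    """Plain gradient descent: repeatedly step against the gradient."""
    x = start
    for _ in range(steps):
        x -= learning_rate * gradient(x)
    return x

best = minimize(start=0.0)
```

The procedure has no notion of what the number means. It only follows the slope downhill, which is exactly why the choice of the cost function (for example, engagement) matters so much.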

So we can calmly come to the conclusion that we lost the first contact. The emergence of generative models will therefore be our second encounter with advanced AI. It is worth conducting a thought experiment for this circumstance. Let's assume that the work on the further development of AI is completely stopped. In an instant, and all over the world, further development of this technology is effectively banned, and all scientists and engineers focus only on the use and implementation of those systems that we have developed so far. Let's consider how this will affect our society.

A dark future

Let's start with the economy, as this is probably the dimension where we will see the fastest changes. The effective and unrestricted implementation of generative models into business processes will lead to a huge inflation of knowledge.

It's not even about the full automation of intellectual work (see Auto-GPT), but about a significant increase in its productivity, which will cause big changes in the economy. It is also untrue to say that people whom current AI cannot replace or support are immune to these changes. Robots may not replace a good carpenter in the next few years, but so what if half of his customers lose their jobs?

People are, of course, extremely flexible, so presumably, as with previous industrial revolutions, they will find a new occupation this time too. Nevertheless, it is safe to assume that the process of such changes will be painful for many. As Lex Fridman, author of a well-known podcast series, rightly pointed out, AI doesn't just take over tedious and repetitive tasks, but also those that are fun for us. I damn well enjoy writing code, just as I'm sure many graphic designers or journalists enjoy their work and the creative process involved.

Knowledge inflation will also have unpredictable effects on the education system. We assume that we will find new activities, but we can't tell what they will consist of. By the same token, I don't know what skills will be relevant in 10 years, so I don't know what to study. What's more, most people don't like intellectual exertion, and unlike physical exertion, which is trendy, there is currently no fashion for learning. So how will our society change if, at some stage in its history, knowledge and intellectual ability no longer provide an advantage over other people? This question is also relevant for another reason. Well, our entire system of information circulation will be put to a powerful test.

An example of this is the recent high-profile case of model Claudia, whose nude photos could be purchased on Reddit. As it quickly turned out, Claudia does not exist, and the photos were generated by an AI (probably a Stable Diffusion model). The latest technologies in the field of speech synthesizers (e.g., VALL-E) make it possible to recreate someone's voice based on just a few seconds' sample.

Such technologies make any information available on the Internet virtually impossible to verify. This, in turn, calls into question the point of using the global network, which, after all, was created to transmit information. Since I am unable to verify in any way the information I receive, it is worthless to me. Thus, it becomes practically worthless to use the Internet itself.

Add to this the fact that bots already generate a large share of web traffic, by some estimates close to half, and their capabilities will be greatly enhanced by systems such as Auto-GPT. In just a moment, a scenario straight out of Cyberpunk may turn out to be entirely real.

The Internet will become an extremely hostile ecosystem, where everything wants to trick or addict us. Using the network will be as risky and harmful as taking really strong drugs.

The problems described are just the tip of the iceberg. Already, technologies are available that, based on AI, allow us to literally see through walls or read someone's thoughts. The development of such systems will change everything and affect every aspect of our lives. Tristan Harris and Aza Raskin, mentioned earlier, predict that the 2024 U.S. presidential election will be the last democratic election in the country. It doesn't matter which political option wins them, because in the long run the only thing that will matter anyway is who has more computing power. The Internet was supposed to be our window to the world, but it has also turned out to be the window to our minds.

Today, threats are peeking through this window that no human communities have faced in the entire history of our species.

An algorithm that allows "seeing through walls" was presented in late December 2022 in the paper DensePose From WiFi. It belongs to the field referred to as "computer vision," whose main research area is computer image processing. Algorithms specialized in computer vision are able to extract and analyze information from image data, so they find applications in the military or medicine. During training, the algorithm in question "looks at" video recordings and Wi-Fi radio signals recorded at the same time. Thus, it is able to learn the relationship between the two types of data in order to then reconstruct the image based only on the Wi-Fi signals.

Systems that allow "mind reading" operate on a similar principle. Algorithms are trained, for example, on data recording brain activity while people look at specific images. They are then able to reconstruct the image that the person being studied is looking at, using only information about their brain activity. I am sure that these technologies will find many interesting applications in the future, and I advise all skeptics to remember what the first prototype cars looked like.

A zombie apocalypse

The consequences described above refer to the situation in which we stop the further development of AI. Of course, this is not going to happen, because we are stuck in the middle of the moloch trap and are rushing faster and faster towards the unknown. This raises the question: is the development of AI capable of threatening the existence of all humanity? This is, of course, another taboo topic, which until recently was treated as the daydream of immature fantasy fans. Serious people, after all, don't talk about such matters.

However, it turns out that there is a belief among a wide range of scientists and AI specialists that such a scenario is possible. While the probability still seems very low, it is certainly not zero.

Let us now focus on two potential scenarios for such events. The first is extremely depressing. It assumes that in the course of further development of technology, we build systems that are extremely intelligent and take over further competencies from us, but do not possess any form of consciousness. This may be because consciousness and intelligence are not necessarily identical, and we simply may not be able or willing to implement the former. However, this does not change the fact that such an intelligent zombie could slip through our fingers as a result of human error or malicious action. We may also make a mistake in the design of such an AI and fail to foresee the further consequences of its actions. Let's remember that all these systems aim to optimize a certain function, so they work to perform their task in the most efficient way possible. So we may be finished off by the proverbial toaster, which will bury us under an endless pile of toast.

Perhaps the darkest vision of such an apocalypse is the manga BLAME!, authored by Tsutomu Nihei. In it, mankind loses control over the Netsphere (the equivalent of our Internet), and thus over the countless machines it controls. The security system, acting like an anti-virus system, begins to fight humans, and the out-of-control machines continue to perform their assigned tasks and endlessly expand the infrastructure. Thousands of years later, primitive tribes of humans live in a gigantic City, which the ancient machines keep building and which already encompasses the entire Solar System, forming a grotesque Dyson sphere.

The potential for AI to influence different communities is brilliantly illustrated by the story of a YouTuber well known in the industry. Yannic Kilcher prepared his own version of a language model, using data from 4chan. The data was selected in such a way that it deliberately violated all possible rules of political correctness, which is, of course, the opposite of all the "ethical rules" used to build such models. Yannic then released his creation, called GPT-4chan or simply the worst model ever, on the forum from which he downloaded the data. The results of the experiment turned out to be extremely interesting. Users quickly noticed that something was wrong, as the bot was posting from a location in the Seychelles at a rate far too fast for a human. This gave rise to many conspiracy theories, according to which the mysterious activity from the Seychelles was in fact a secret operation of the CIA, India, the world government, etc. The situation was fueled by more bots that Yannic allowed into the forum. Eventually, some users recognized the AI, and the experiment was terminated. Interestingly, the model trained on 4chan did better in truthfulness tests than the most polished versions of GPT at that moment.

As bizarre as it may sound, modern AI seems to resemble just such an intelligent zombie. Neuroscientist and psychiatrist Giulio Tononi points to the connection that exists between consciousness and the distinctive structures found in the brain. The specific structure of the brain is supposed to allow a constant flow of signals, creating what in programming is called "loops." Modern neural networks have a different structure, allowing signals to flow only one way (feed forward).

This may mean that by developing the classical architecture of neural networks, we will never be able to build artificial consciousness. On the other hand, it would prove that even such complex skills as the fluent use of multiple languages do not require the participation of consciousness at all. (Elisasheva's note: pay attention to this passage and how it corresponds with Gurdjieff's teachings on the mechanical man.) This could come as a shock, and such a stance would probably be unacceptable to many people, but anyone who has ever been involved in building AI knows that intelligence basically boils down to information processing.

Digital god

The second apocalypse scenario seems at least a little more interesting. This is because it assumes that we will actually create a superintelligence equipped with consciousness. Admittedly, modern algorithms do not meet the requirements of Tononi's theory of consciousness, although we know of architectures that can create loops (so-called "recurrent networks"). So we can imagine a scenario in which we further develop this direction and succeed at some stage. Anyway, it is possible that Tononi is wrong, or that other kinds of awareness are possible.
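The structural difference between the two kinds of networks can be shown with a toy sketch. It says nothing about consciousness and is not a model of Tononi's theory. It only contrasts a one-way computation with one that feeds its own state back in. The weights `w_in`, `w_out` and `w_loop` are arbitrary values chosen for illustration.

```python
import math

def feed_forward(x, w_in=0.5, w_out=2.0):
    """Signal flows one way: input -> hidden -> output. Nothing is remembered."""
    hidden = math.tanh(w_in * x)
    return w_out * hidden

def recurrent(inputs, w_in=0.5, w_loop=0.9):
    """The hidden state is fed back into itself at every step, forming a loop."""
    state = 0.0
    history = []
    for x in inputs:
        state = math.tanh(w_in * x + w_loop * state)
        history.append(state)
    return history

# A single pulse followed by silence (assumed input).
echo = recurrent([1.0, 0.0, 0.0])
```

In the recurrent version, the first input keeps "echoing" through the state even after the input has gone silent, whereas the feed-forward function returns the same output for the same input regardless of what came before.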

In a recent interview, AI legend Geoffrey Hinton pointed out that all those who claim that large language models don't have consciousness can't even give a definition of it. Regardless of what kind of consciousness AI will gain and how it will happen, it is certain that it will then be a completely alien being.

To illustrate the situation, renowned AI researcher Eliezer Yudkowsky proposes a thought experiment comparing a super-intelligent AI to a man locked in a box on an alien planet. The aliens surrounding him are stupid, and they are also extremely slow - one second of their time lasts 100 years for the prisoner. At the same time, they have given him access to most of their systems and infrastructure. No matter what the prisoner wants to accomplish, he will have a huge advantage over the aliens. Of course, in this metaphor, we are the aliens.

Yudkowsky thus underscores the enormous problems that await us in the field of aligning superintelligent AI with our goals (AI alignment). Unlike other key scientific problems in our history, in this case we have only one chance to tackle this task. The first superintelligent AI launch experiment that fails will, in Yudkowsky's view, spell the end of all humanity.

The situation is viewed somewhat more optimistically by the aforementioned Max Tegmark. In his opinion, the problem of AI alignment is solvable, but we need to hold off on research work at least for a while, and we need to do it now. In this way, it will be possible to get out of the moloch trap and find an answer to the question of how to select a goal for a super-intelligent AI in order to keep it under control. Among those who signed his letter was Elon Musk, who shortly thereafter announced plans for an AI he called TruthGPT. It is supposed to be an AI tasked with "maximizing the search for truth," which can be understood as optimizing the delivery of truthful information. Tegmark also hinted that a similar truth-seeking objective function could be a good solution to the problem of controlling powerful AI. It is worth noting that even if Musk has good intentions and an idea, he has just joined the Moloch race.

Skepticism

Finally, it is worth addressing the voices of skepticism about AI and the dangers it poses. Of course, this is not about arguments like "AI will never match humans, because it is just a machine," because these simply mean nothing and are most often raised by people who have never written even a line of code in their lives. Similarly, claims that AI has no emotions, intuition or any other magical trait that will ultimately prevent it from reaching human levels of intelligence are worth little.

All of these are manifestations of mere anthropocentrism and are reminiscent of the belief that the Earth is at the center of the universe and that the Sun orbits around it because, after all, it is the Sun that moves in the sky.

What deserves attention, however, are the voices of people whose research has elevated the field to its current level. One of them is undoubtedly Yann LeCun, who, in an interview with Andrew Ng (also an accomplished AI researcher), rightly pointed out how much algorithmic intelligence differs from human intelligence. As an example, he cited autonomous cars, which are an insanely difficult research challenge, while the average teenager can learn to drive in a dozen or so hours. It's a view that is close to my own, since I also assume that we may soon hit some extremely complex problem that will block progress in AI for the next 50 years. We have, after all, already experienced such a period of stagnation, called the "AI winter."

All this, however, does not change the fact that the technology that is already available today could transform our world beyond recognition. I don't know if we should stop research for the next few months, but I do know that we should immediately start working on the legal regulation of AI. Not only the industry, but society as a whole needs it.

breakingdefense.com
